#Download winutils.exe install

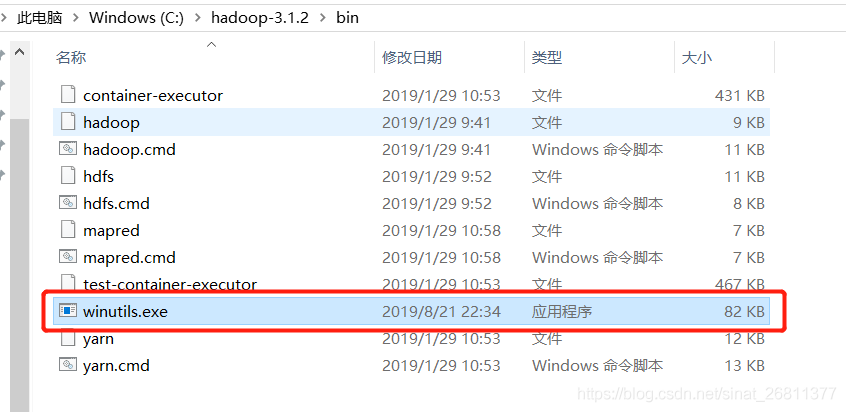

With this article, I hope to give you a useful guide to installing PySpark without problems. To get winutils.exe, download the archive, unzip the folder, and create a new folder named Hadoop on the C: drive. A commonly reported winutils.exe error is "ChangeFileModeByMask error (3): The system cannot find the path specified.", which points to a path that does not exist. Do not confuse winutils.exe with the similarly named collection of Windows utilities that close down and restart Windows at the click of a button (including an optional delay), close all (or selected) programs and windows, close programs after a specified time, and perform cleanup tasks before closing.
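Because a missing path is such a common source of trouble, a quick check can help before starting PySpark. The following is a minimal sketch, not part of any official tooling: the function name `find_winutils_problems` is hypothetical, and it assumes the environment variable is spelled `HADOOP_HOME` and that winutils.exe lives in its `bin` subfolder.

```python
import os
from pathlib import Path


def find_winutils_problems(env=None):
    """Return a list of problems; an empty list means the layout looks fine.

    Hypothetical helper. Assumes winutils.exe is expected at
    %HADOOP_HOME%\\bin\\winutils.exe.
    """
    env = os.environ if env is None else env
    problems = []

    hadoop_home = env.get("HADOOP_HOME")
    if not hadoop_home:
        # Nothing else can be checked without the base folder.
        problems.append("HADOOP_HOME is not set")
        return problems

    bin_dir = Path(hadoop_home) / "bin"
    if not bin_dir.is_dir():
        problems.append(f"missing folder: {bin_dir}")
    elif not (bin_dir / "winutils.exe").is_file():
        problems.append(f"winutils.exe not found in {bin_dir}")
    return problems
```

Calling `find_winutils_problems()` with no arguments inspects the current process environment; passing a plain dictionary makes it easy to try out other settings.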

Installing PySpark on Windows 10 requires a few extra steps that are easy to forget. These steps apply only to Windows, not to macOS or Linux.

#Download winutils.exe how to

If we want to compare Apache Spark with Hadoop, Spark is often described as up to 100 times faster in memory and 10 times faster on disk. As for on-premises installations, Hadoop requires more disk space and Spark requires more RAM, which means that setting up a cluster could be very expensive. Today we can solve that problem with the cloud computing services provided by AWS and Azure. If you are interested in knowing more about them, in particular a topic like the Cloud Data Warehouse, let me suggest my article on that subject. In this article, instead, I will show you how to install the Spark Python API, called PySpark.

Download the release hadoop-X.Y. from a mirror site. The page lists the mirrors closest to you based on your location. Download the checksum hadoop-X.Y.sha512 or hadoop-X.Y.mds from Apache, then run shasum -a 512 hadoop-X.Y. on the downloaded release and compare the output with the checksum. All previous releases of Hadoop are available from the Apache release archive site. Many third parties distribute products that include Apache Hadoop and related tools.

On Windows, all processes still must have access to the native components: hadoop.dll and winutils.exe. Create an environment variable named HADOOP_HOME: go to Advanced Settings -> Environment Variables and click New.
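On Windows, where `shasum` may not be available, the SHA-512 comparison can also be done with Python's standard `hashlib` module. This is a sketch under stated assumptions: the function names are hypothetical, and the expected digest is passed in as a plain hex string that you copy by hand from the checksum file.

```python
import hashlib


def sha512_of_file(path, chunk_size=1 << 20):
    """Compute the SHA-512 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha512()
    with open(path, "rb") as handle:
        # Read in 1 MiB chunks so large archives do not fill memory.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_checksum(path, expected_hex):
    """Compare a file's SHA-512 with an expected hex digest.

    Whitespace and letter case in expected_hex are ignored, since
    published checksums are sometimes wrapped or upper-cased.
    """
    cleaned = "".join(expected_hex.split()).lower()
    return sha512_of_file(path) == cleaned
```

If `matches_checksum` returns `False`, the download is incomplete or altered and should be fetched again.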

Go to the download page of the official website: Apache Download Mirrors - Hadoop 3.3.0.

#Download winutils.exe for free

The two most famous cluster computing frameworks are Hadoop and Spark, both available for free as open source. Step 1 - Download the Hadoop binary package: select a download mirror link. Then download the zip from the winutils Git repository, unzip it, and copy winutils.exe from the winutils-master\hadoop-2.7.1\bin folder to C:\Bigdata\hadoop\bin.
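The copy step can be scripted so that it is harder to forget. The sketch below is only an illustration: `install_winutils` is a hypothetical helper, it assumes the zip has already been extracted, and it sets `HADOOP_HOME` for the current Python process only (a permanent variable still has to be created through the Windows settings).

```python
import os
import shutil
from pathlib import Path


def install_winutils(extracted_bin, hadoop_home):
    """Copy winutils.exe from an extracted bin folder into <hadoop_home>/bin.

    Hypothetical helper. Returns the path of the copied file.
    """
    source = Path(extracted_bin) / "winutils.exe"
    if not source.is_file():
        raise FileNotFoundError(f"winutils.exe not found in {extracted_bin}")

    target_dir = Path(hadoop_home) / "bin"
    # Create the target folder (and parents) if it does not exist yet.
    target_dir.mkdir(parents=True, exist_ok=True)
    target = target_dir / "winutils.exe"
    shutil.copy2(source, target)

    # Visible to this process and the child processes it starts, nothing else.
    os.environ["HADOOP_HOME"] = str(hadoop_home)
    return target


# Example call with the folders named in the text (paths are assumptions
# about where the zip was extracted):
# install_winutils(r"winutils-master\hadoop-2.7.1\bin", r"C:\Bigdata\hadoop")
```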

When we work with Big Data, we need more computational power, which we can get with a distributed system of multiple computers. Moreover, to work effectively in the big data ecosystem, we also need a cluster computing framework that lets us perform processing tasks quickly on large data sets. The GitHub repository cdarlint/winutils provides the winutils.exe, hadoop.dll and hdfs.dll binaries for Hadoop on Windows.
